SemEval-2016

Semantic Evaluation Exercises

The task focuses on community question answering (QA) sections of websites, where almost anyone can post or answer a question. Reading through every answer in a thread and making sense of them can take a great deal of time and effort.

This creates the need to automate that process “by identifying the posts in the answer thread that answer the question well vs. those that can be potentially useful to the user (e.g., because they can help educate him/her on the subject) vs. those that are just bad or useless.”

For this exercise, thousands of question-answer (QA) pairs were collected from WebTeb, along with over 100,000 QA pairs in total from two other websites, Al-Tibbi and Islamweb.

Participants searched these thousands of QA pairs to find answers to the WebTeb questions. For the Arabic subtask, participants had to rerank the correct answers for a new question, that is, order the retrieved QA pairs by their relevance to that question.

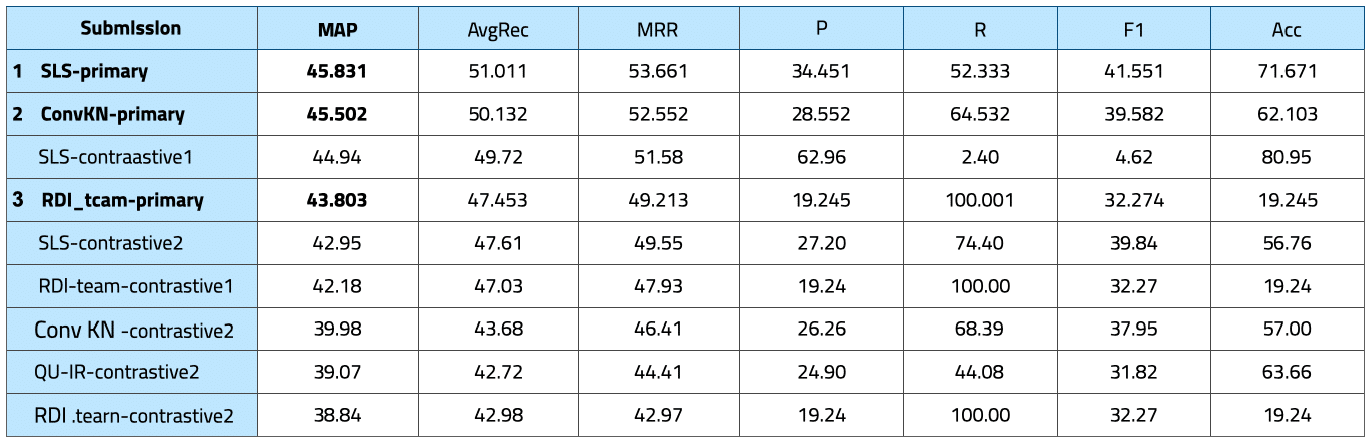

The official evaluation measure used to rank the participating systems is Mean Average Precision (MAP), computed over the ten comments that each participating system ranked highest.
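A minimal sketch of MAP restricted to the top ten ranked items, assuming each question's ranking is given as a list of booleans (True = relevant). The function names are hypothetical, and the normalization is an assumption: here each question's average precision is divided by the number of relevant items found in the top ten, whereas other MAP variants divide by the total number of relevant items. The official task scorer may differ in such details.

```python
from typing import Sequence


def average_precision_at_k(relevance: Sequence[bool], k: int = 10) -> float:
    """Average precision over the top-k ranked items for one question.

    Assumption: normalized by the number of relevant items found in the
    top k; a question with no relevant item in the top k scores 0.0.
    """
    hits = 0
    precisions = []
    for rank, is_relevant in enumerate(relevance[:k], start=1):
        if is_relevant:
            hits += 1
            # Precision at this rank, recorded only where a relevant item appears.
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(rankings: Sequence[Sequence[bool]], k: int = 10) -> float:
    """MAP@k: mean of the per-question AP@k values."""
    if not rankings:
        return 0.0
    return sum(average_precision_at_k(r, k) for r in rankings) / len(rankings)


# Two questions: AP = (1/1 + 2/3) / 2 = 5/6 and AP = 1/2, so MAP = 2/3.
print(mean_average_precision([[True, False, True], [False, True]]))
```

A relevant item ranked below position ten contributes nothing, which is why a system is rewarded only for placing good answers near the top.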

We further report the results for two unofficial ranking measures: Mean Reciprocal Rank (MRR) and Average Recall (AvgRec).
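Mean Reciprocal Rank can be sketched in the same style, again assuming boolean relevance lists and hypothetical function names. This version scores the full ranking; whether the official scorer also applies a ten-item cutoff is an assumption not settled here. AvgRec is left out because its exact definition is not given in this text.

```python
from typing import Sequence


def reciprocal_rank(relevance: Sequence[bool]) -> float:
    """1 / rank of the first relevant item, or 0.0 if none is relevant."""
    for rank, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(rankings: Sequence[Sequence[bool]]) -> float:
    """MRR: mean of the per-question reciprocal ranks."""
    if not rankings:
        return 0.0
    return sum(reciprocal_rank(r) for r in rankings) / len(rankings)


# Reciprocal ranks 1/2, 1/1 and 0 average to 0.5.
print(mean_reciprocal_rank([[False, True], [True], [False, False]]))
```

Unlike MAP, MRR looks only at the first relevant item, so it rewards getting one good answer to the top rather than ordering all of them well.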

3rd PLACE WINNER

RDI won 3rd place in SemEval-2016 Task 3: Community Question Answering with a MAP of 43.80, and also ranked third on AvgRec and MRR.

We combined a TF.IDF module with a recurrent language model and information from Wikipedia.
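To illustrate only the TF.IDF part, here is a generic sketch that reranks candidate QA texts by cosine similarity between TF.IDF vectors and the new question. It is not RDI's actual module: the recurrent language model and Wikipedia information are not shown, and the tokenizer (lowercased `\w+` tokens), the smoothed IDF formula, and all function names are assumptions. Real Arabic text would also need proper normalization and segmentation.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def tokenize(text: str) -> List[str]:
    # Assumption: simple lowercased word tokens (Unicode-aware, so Arabic
    # letters are kept, but no stemming or normalization is applied).
    return re.findall(r"\w+", text.lower())


def tfidf_vectors(docs: Sequence[str]) -> Tuple[List[Dict[str, float]], Dict[str, float]]:
    """Build sparse TF.IDF vectors for the candidate documents."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(term for toks in tokenized for term in set(toks))
    # Smoothed IDF (an assumption; many variants exist).
    idf = {t: math.log((1 + n) / (1 + c)) + 1.0 for t, c in df.items()}
    vecs = [{t: tf * idf[t] for t, tf in Counter(toks).items()} for toks in tokenized]
    return vecs, idf


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def rerank(question: str, candidates: Sequence[str]) -> List[int]:
    """Return candidate indices sorted by TF.IDF cosine similarity to the question."""
    vecs, idf = tfidf_vectors(candidates)
    # Question terms that never occur in the candidates get no weight.
    q_vec = {t: tf * idf[t] for t, tf in Counter(tokenize(question)).items() if t in idf}
    scores = [cosine(q_vec, v) for v in vecs]
    return sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)


candidates = [
    "how to treat a headache",
    "best diet for diabetes",
    "headache causes and treatment",
]
print(rerank("what is the treatment for a headache", candidates))
```

In a combined system like the one described, a lexical score of this kind would typically be one signal among several; how RDI weighted it against the language model and Wikipedia features is not stated here.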